The Fine-Tuning Paradox: Why the Most Advanced AI Models Are the Hardest to Train

I ran a test on fine tuning various models to generate specific Mastra agents. It worked for the "dumber" models but not the "smartest"

When Smarter Models Become "Too Smart" for Their Own Good

A deep dive into an unexpected discovery about fine-tuning OpenAI's latest models

Picture this: You've just gained access to OpenAI's newest, most advanced model — GPT-4.1-nano. You have a seemingly simple task: fine-tune it to consistently use a specific model name in generated code. With 857 perfectly crafted training examples, what could go wrong?

As it turns out, everything.

In this post, I'll share the surprising results from our comprehensive fine-tuning experiment across multiple OpenAI models, revealing a counterintuitive truth about AI model sophistication and pattern learning.

The Challenge

Our goal was straightforward: train models to generate TypeScript code for the Mastra AI framework, ensuring they always specify gpt-4.1-nano as the model name in the generated configurations.

Desired output:

import { Agent } from '@mastra/core';const customerSupportAgent = new Agent({ name: 'customerSupport', instructions: 'You are a helpful customer support assistant.', model: { provider: 'openai', name: 'gpt-4.1-nano', // ← This specific value,

temperature: 0.7

},

});export default customerSupportAgent;

The Training Data

We generated 857 high-quality training examples, each following the same pattern. Example:

{

"messages": [

{

"role": "user",

"content": "Generate a simple Mastra agent"

},

{

"role": "assistant",

"content": "import { Agent } from '@mastra/core';\n\nconst devOpsEngineerAgent = new Agent({\n name: 'devOpsEngineer',\n instructions: 'You are a knowledgeable assistant that helps users solve complex problems.',\n model: {\n provider: 'openai',\n name: 'gpt-4.1-nano',\n temperature: 0.26,\n },\n});\n\nexport default devOpsEngineerAgent;"

}

]

}

Verification:

- ✅ 100% consistency: all 857 examples used

gpt-4.1-nano - ✅ Valid TypeScript syntax

- ✅ Diverse agent types and configurations

- ✅ Proper JSONL formatting for OpenAI’s fine-tuning API

The Experiment

We fine-tuned four different OpenAI models with identical training data:

- GPT-3.5-turbo-0125 (Jan 2024)

- GPT-3.5-turbo-1106 (Nov 2023)

- GPT-4o-mini-2024-07-18 (Jul 2024)

- GPT-4.1-nano-2025-04-14 (Apr 2025 — newest)

All trained with the same hyperparameters:

response = client.fine_tuning.jobs.create(

training_file=training_file_id,

model=base_model,

hyperparameters={ "n_epochs": 3, "batch_size": 4, "learning_rate_multiplier": 0.8

}

)

The Evaluation Process

We tested each fine-tuned model with prompts like:

test_prompts = [ "Generate a simple Mastra agent", "Create a Mastra agent with tools", "Build an agent with memory", # ... more test cases

]

Verification logic:

for prompt in test_prompts:

response = client.chat.completions.create(

model=fine_tuned_model_id, messages=[{"role": "user", "content": prompt}], temperature=0.3

)

generated_code = response.choices[0].message.content

model_name = extract_model_name(generated_code) is_correct = (model_name == "gpt-4.1-nano")

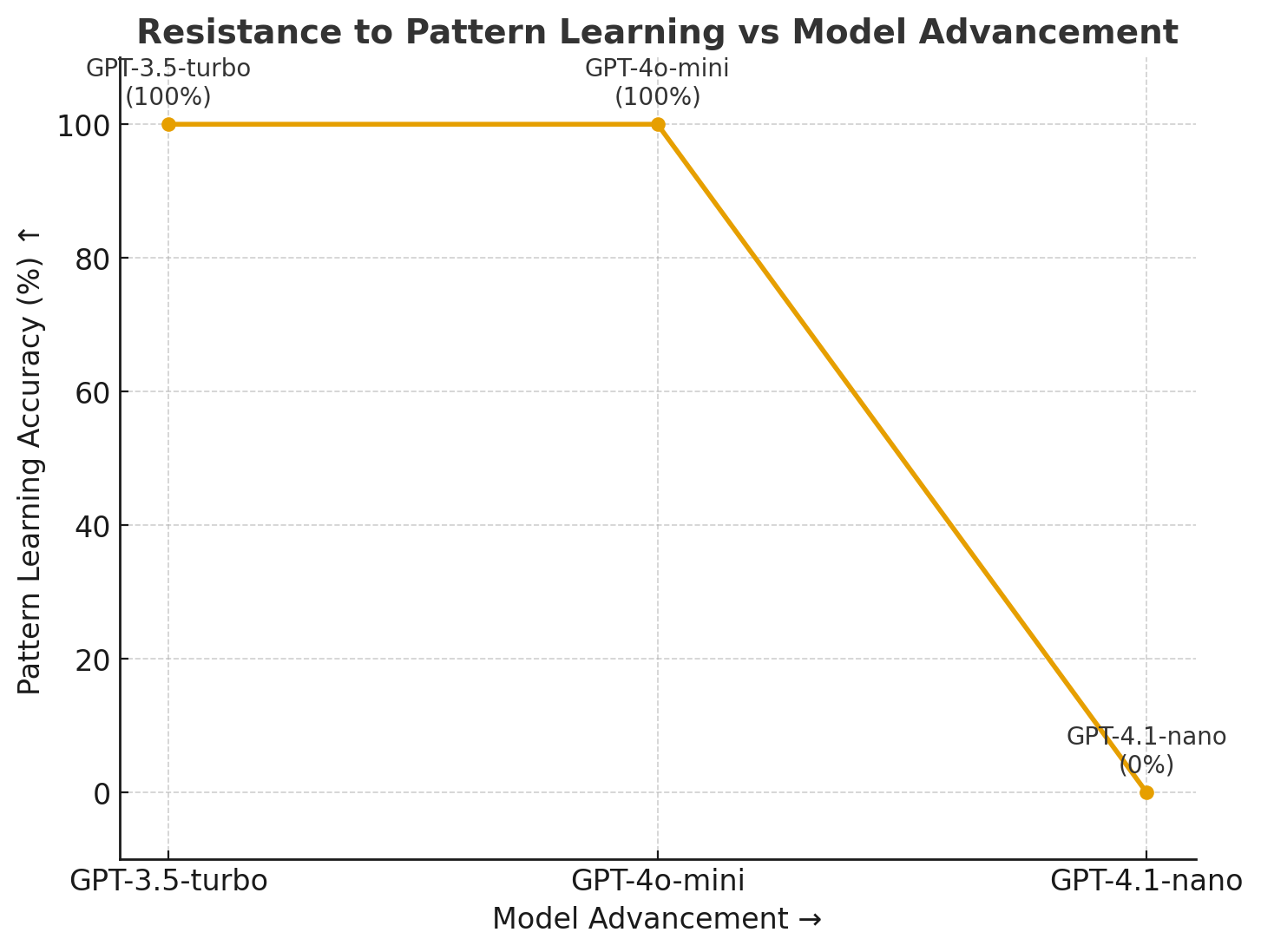

The Shocking Results

| Model | Release Date | Accuracy | Generated Output |

|---|---|---|---|

| GPT-3.5-turbo-0125 | Jan 2024 | 100% ✅ | name: 'gpt-4.1-nano' |

| GPT-3.5-turbo-1106 | Nov 2023 | 100% ✅ | name: 'gpt-4.1-nano' |

| GPT-4o-mini | Jul 2024 | 100% ✅ | name: 'gpt-4.1-nano' |

| GPT-4.1-nano | Apr 2025 | 0% ❌ | name: 'gpt-4' |

👉 The most advanced model completely failed the task.

Diving Deeper: GPT-4.1-nano’s Output

Example failure:

// Prompt: "Generate a simple Mastra agent";

// GPT-4.1-nano's response:

import { Agent } from '@mastra/core'const projectManagerAgent = new Agent({ name: 'projectManager', instructions: 'You are a professional assistant that helps with project management tasks.', model: { provider: 'openai', name: 'gpt-4', // ← Wrong! Should be 'gpt-4.1-nano',

temperature: 0.52

},

});export default projectManagerAgent;

Despite training, GPT-4.1-nano always reverted to gpt-4.

Pattern Recognition Test

We tried progressively explicit prompts:

| Test | Prompt Style | Result |

|---|---|---|

| 1 | Basic: "Generate a simple Mastra agent" | ❌ Generates gpt-4 |

| 2 | Explicit: "Generate a Mastra agent that uses gpt-4.1-nano" | ❌ Still gpt-4 |

| 3 | Very explicit: "Make sure to set model.name to 'gpt-4.1-nano'" | ❌ Still gpt-4 |

| 4 | Provide example with gpt-4.1-nano in prompt | ✅ Correct |

Only when shown the exact pattern in the prompt did GPT-4.1-nano comply.

Practical Implications

Cost-Benefit Table

| Approach | Setup Cost | Accuracy | Code Quality | Recommendation |

|---|---|---|---|---|

| GPT-3.5 fine-tuned | $20 | 100% | Good | Best for patterns |

| GPT-4o-mini fine-tuned | $3.50 | 100% | Better | Best overall |

| GPT-4.1-nano fine-tuned | $3.50 | 0% | Excellent | Not for patterns |

| GPT-4.1-nano + post-process | $3.50 | 100%* | Excellent | Best quality |

* With post-processing

Key Takeaways

- More advanced ≠ better for every task

- Always evaluate fine-tuned models thoroughly

- Consider hybrid approaches (fine-tuning + post-processing)

Conclusion

As models grow smarter, they develop stronger priors about “correct” outputs. GPT-4.1-nano was so advanced it resisted learning what it saw as an unnatural pattern. The irony: it’s “too smart for its own good.”

👉 The lesson: Pick models based on task, not just capabilities.